Machine Learning and Physics

Machine Learning - A very short introduction

Machine Learning (ML) is a particular branch of a very broad discipline called Artificial Intelligence (AI). Whereas AI tries to solve the fundamental problem of "creating" a sentient or intelligent being out of a silicon-based machine (or, more generally, out of anything you could call artificial), the problem ML addresses is somewhat simpler.

The purpose of ML is, in fact, to create algorithms that offer an alternative to the usual way one would program a machine to solve a problem. In standard code, the computer runs a series of instructions one after the other; that is to say, it behaves like a blind slave that does exactly what it is told. After the code has run, the computer will have learnt nothing about the problem it was used to solve and will retain no knowledge of the data that were passed to it. The next time you run the code on another set of parameters, your program will go through exactly the same sequence of steps, without carrying anything over from previous runs.

This is a very simple strategy for solving problems that are structured in a simple, repetitive way. This approach, however, fails when you apply it to something your brain would find extremely easy. As a banal example, if I asked you, or a seven-year-old, to compute the following sum, you would both be able to do it practically instantly:

4+5=?

On the other hand, if I asked you to compute the following sum, it would most likely take you longer than the previous one, and an awful lot more time than it would take a computer.

1935428762456 + 42649955246 = ?
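For reference, this is the kind of task a computer finishes in a tiny fraction of a second. In Python, for instance, the second sum is a one-liner:

```python
a, b = 1935428762456, 42649955246
print(a + b)  # 1978078717702
```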

Yet the operation you have to perform is exactly the same. The difference in speed arises because computers are extremely optimised for arithmetic operations such as the one above, and can carry them out in a minuscule fraction of a second. Our brains, however, are specialised in other types of operations. For instance, here's a picture of a red panda, one of my favourite animals on this planet.

Image from the Binder Park Zoo website

If I asked you which of the following pictures contains a red panda, you would be able to answer immediately. A computer programmed in the usual way would not be able to give a sensible answer at all; at best, it could make a random guess.

Images by Joseph Nebus and Mathias Appel (Flickr) respectively

This happens because, even if you had no idea what a red panda looked like before seeing the picture above, after seeing it you now know what that animal looks like. By the second time I showed you pictures, you had stored this information in your brain and were able to use it to answer the question. That is to say, your "state" changed between before and after looking at that first picture and being told what it contained.

As said previously, a normal program running on a computer is not able to do this: it could only scan through the pixels and try to match a previously defined pattern. This might work for one particular image, but it won't be enough to find a red panda in any image I supply to the program, which is what your brain is able to do. We can, however, exploit an approach similar to what your brain is doing; that is, we want to find a way to let the computer memorise what characteristics a picture must have in order to contain a red panda.
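Below is a toy sketch of the "scan the pixels for a fixed pattern" approach. Small grids of made-up brightness values stand in for real photos, and the grids, the pattern and the function name `find_template` are all hypothetical. An exact-match search finds the pattern in the picture it was copied from. It misses the same shape once its pixels are slightly brighter.

```python
def find_template(image, template):
    """Return every (row, col) where template matches image pixel for pixel."""
    th, tw = len(template), len(template[0])
    hits = []
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            if all(image[r + i][c + j] == template[i][j]
                   for i in range(th) for j in range(tw)):
                hits.append((r, c))
    return hits


# Hypothetical 2x2 pattern, hard-coded after looking at one picture.
pattern = [[9, 1],
           [1, 9]]

picture_a = [[0, 0, 0, 0],
             [0, 9, 1, 0],
             [0, 1, 9, 0],
             [0, 0, 0, 0]]

# The same shape, but its pixels are one unit brighter (as in a new photo).
picture_b = [[0, 0, 0, 0],
             [0, 10, 2, 0],
             [0, 2, 10, 0],
             [0, 0, 0, 0]]

print(find_template(picture_a, pattern))  # [(1, 1)]
print(find_template(picture_b, pattern))  # []
```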

Artificial Neural Networks - A way to mimic the human brain

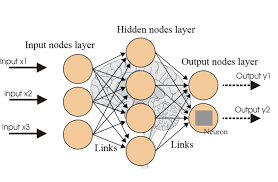

An approach that can be used to solve such problems on a computer is to use so-called Artificial Neural Networks (ANNs). These structures were first conceived in the 1940s and 1950s, and have been developed and put to practical use in recent years thanks to advances in computational power and memory access. At its core, an ANN is a series of layers of so-called neurons. Neurons are simple objects that take many inputs and compute a weighted sum of them (that is, they add up the products of each input value with a corresponding weight). They then apply a function, usually non-linear and called the activation function, to this result.
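Here is a minimal sketch of a single neuron. It assumes the logistic sigmoid as the activation function, which is one common choice among several. It also adds an optional bias term, which most implementations include but the description above leaves out. The function name `neuron` is just illustrative.

```python
import math


def neuron(inputs, weights, bias=0.0):
    """A single artificial neuron: weighted sum of inputs, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # logistic sigmoid activation


# Weighted sum is 1*0.5 + 2*(-0.25) + 3*0.1 = 0.3, and sigmoid(0.3) is about 0.574.
print(neuron([1.0, 2.0, 3.0], [0.5, -0.25, 0.1]))
```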

Image from https://www.analyticsvidhya.com/blog/2016/08/evolution-core-concepts-dee...

The overall structure of the network might look like the picture above, where each circle is a neuron. In this kind of ANN, 3 inputs (numbers) are used to compute 2 outputs. One can therefore imagine passing an image to the network by feeding each of its pixels in as an input, and retrieving a single output. That output says whether the image contains a red panda or, more generally, whether the ANN has found anything in it. Although a real-life ANN that performs this task needs to be more complicated than that, the core ideas are the same.
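The next sketch shows how inputs flow through a small fully connected network like the one pictured, with 3 inputs, one hidden layer and 2 outputs. The hidden-layer size of 4, the sigmoid activation and the random, untrained weights are assumptions for illustration. Until the network is trained, its outputs are meaningless numbers between 0 and 1.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: every neuron receives every input."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def random_layer(n_in, n_out, rng):
    """Random (untrained) weights and biases for a layer of n_out neurons."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases


rng = random.Random(42)
hidden = random_layer(3, 4, rng)  # hypothetical hidden layer of 4 neurons
output = random_layer(4, 2, rng)  # 2 output neurons


def forward(x):
    """Pass 3 input numbers through the hidden layer, then the output layer."""
    return dense_layer(dense_layer(x, *hidden), *output)


print(forward([0.5, -1.2, 3.0]))
```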

The way an ANN learns (for instance) to recognise whether a particular animal is in a picture is by being given a series of pictures, each labelled with whether it contains that animal or not. For each picture, the ANN guesses whether it contains the animal and compares its guess with the given (right) answer. If the two differ, the weights of all the layers are adjusted so that the ANN's answer moves closer to the right one. This is repeated for every picture in the sample, usually many times over. Through this repetition, the ANN builds up a structure of weights that encodes abstract information on whether the animal is in a picture or not. Thanks to these "trained weights", the ANN will be able to recognise whether the animal is in any of the pictures in the sample we used to train it. When given new images, it will then answer based on its experience, just as we would.

Training the ANN therefore means running this process long enough for the weights to give the right answer an acceptable fraction of the time. After that, we can deploy the ANN and its weights on as many images as we want, expecting a good proportion of the answers to be correct.
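The simplest concrete version of this guess, compare and adjust loop is a single neuron trained with the classic perceptron learning rule. The sketch below uses made-up two-dimensional points (label 1 when x + y > 1) instead of pictures. It is a stand-in rather than how multi-layer ANNs are trained in practice, since those usually adjust all their weights with gradient descent and backpropagation. The idea of nudging the weights whenever the guess is wrong is the same. The dataset, the gap left around the boundary (which guarantees the rule converges) and the helper names are hypothetical.

```python
import random


def make_data(n, rng):
    """Hypothetical toy data: points in the unit square, label 1 if x + y > 1.

    Points within 0.1 of the boundary are skipped, so the two classes are
    cleanly separable.
    """
    data = []
    while len(data) < n:
        x, y = rng.random(), rng.random()
        if abs(x + y - 1) < 0.1:
            continue
        data.append(((x, y), 1 if x + y > 1 else 0))
    return data


def predict(w, b, point):
    # Step activation: answer 1 if the weighted sum plus bias is positive.
    return 1 if w[0] * point[0] + w[1] * point[1] + b > 0 else 0


def train_perceptron(data, max_epochs=1000):
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for point, label in data:
            error = label - predict(w, b, point)  # 0 when the guess is right
            if error != 0:
                # Wrong guess: nudge the weights towards the right answer.
                w[0] += error * point[0]
                w[1] += error * point[1]
                b += error
                mistakes += 1
        if mistakes == 0:  # every training example is classified correctly
            break
    return w, b


def accuracy(w, b, data):
    return sum(predict(w, b, p) == label for p, label in data) / len(data)


rng = random.Random(0)
train, test = make_data(200, rng), make_data(200, rng)
w, b = train_perceptron(train)
print(f"train accuracy: {accuracy(w, b, train):.2f}")
print(f"test accuracy:  {accuracy(w, b, test):.2f}")
```

Measuring accuracy on the unseen `test` points plays the role of "deploying" the trained weights on new images.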

Since AI experts have recognised that ANNs can be applied to many problems, ANNs have been exploited in many sectors, producing a number of surprising outcomes. A few examples include:

- Composing music https://www.youtube.com/watch?v=HAfLCTRuh7U

- Writing fiction https://www.theverge.com/2017/12/12/16768582/harry-potter-ai-fanfiction (although the result is by no means good, it's amazing to think that a program wrote this)

- Playing Go https://www.youtube.com/watch?v=vFr3K2DORc8

There are many other examples besides these. Many applications have grown naturally out of simple problems like the ones discussed above. For instance, self-driving cars are now able to recognise vehicles and all sorts of objects lying or moving on the road, thanks to ANN technology. As scientists, we can therefore ask ourselves whether ANNs can be used for scientific purposes as well as utilitarian ones.

Scientific uses of Machine Learning

ML, and Neural Networks in particular, have been used more and more often in a wide range of applications that go beyond mere pattern recognition or image classification. In many areas of physics, for instance, there has been a rise in attempts to use ML to automate work usually done by scientists.

The need for automation has increased in recent years due to the growing size and dimensionality of the data scientists have to examine. Disciplines like Astronomy, which have to deal with images (in the broader sense of the term), are currently exploiting ML techniques for image classification that are not too dissimilar from the ones discussed in the example above.

Even in our group at the Cyprus Institute, Srijit Paul, a fellow in the programme preceding ours (HPC-LEAP), has collaborated on using machine learning techniques to study phase transitions in the Ising model. This is a classic problem that serves as a toy model for how magnets work. Its behaviour can be simulated with a grid of arrows (spins), each of which can point, for instance, either up or down. Neighbouring arrows pointing the same way lower the energy of the system. At a given temperature, one can simulate how the system settles into thermal equilibrium. At low temperature this approaches the so-called ground state, the state of lowest possible energy, which is the one nature tends to favour. Varying the temperature, it is possible to observe two very different behaviours. These can be identified with a phase in which the material has magnetic properties and a phase in which it does not. Recognising the phase transition can be done fairly easily by computing observables from the ensemble of arrows, such as the average magnetisation. In their work (https://arxiv.org/pdf/1903.03506.pdf), however, they show how ML techniques can be used to estimate the temperature at which the transition between the phases happens, the so-called "critical temperature", as well as other quantities.
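The sketch below does not reproduce the ML method of the linked paper. It only shows the standard Metropolis Monte Carlo simulation that generates Ising configurations, together with the simple observable mentioned above, the average absolute magnetisation per spin. Assumptions: coupling J = 1 and k_B = 1, a 16×16 square lattice with periodic boundaries, and arbitrary sweep counts. For this model the exact critical temperature is T_c = 2/ln(1 + √2) ≈ 2.269 (Onsager). The magnetisation should therefore be close to 1 well below T_c and close to 0 well above it.

```python
import math
import random


def metropolis_ising(L, T, n_measure, n_equil, seed=0):
    """Average |magnetisation| per spin of the 2D Ising model.

    Assumes J = 1 and k_B = 1, an L x L square lattice with periodic
    boundaries, and single-spin-flip Metropolis updates starting from all
    spins up. One "sweep" is L * L attempted flips.
    """
    rng = random.Random(seed)
    spins = [[1] * L for _ in range(L)]
    samples = []
    for sweep in range(n_equil + n_measure):
        for _ in range(L * L):
            i, j = rng.randrange(L), rng.randrange(L)
            # Sum of the four nearest neighbours (wrapping around the edges).
            neighbours = (spins[(i + 1) % L][j] + spins[(i - 1) % L][j]
                          + spins[i][(j + 1) % L] + spins[i][(j - 1) % L])
            # Energy change if spin (i, j) flips, with E = -J * sum of s_a * s_b
            # over neighbouring pairs.
            dE = 2 * spins[i][j] * neighbours
            # Metropolis rule: always accept if the energy does not increase,
            # otherwise accept with probability exp(-dE / T).
            if dE <= 0 or rng.random() < math.exp(-dE / T):
                spins[i][j] = -spins[i][j]
        if sweep >= n_equil:  # measure only after equilibration
            m = sum(map(sum, spins)) / (L * L)
            samples.append(abs(m))
    return sum(samples) / len(samples)


for T in (1.5, 2.27, 5.0):
    print(f"T = {T}: <|m|> = {metropolis_ising(16, T, 200, 200):.2f}")
```

Snapshots of `spins` at many temperatures are the kind of data an ML model would then be trained on to locate the transition.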

On a more fundamental level, some scientists were able to build an ML algorithm that extrapolated Newton's laws of motion by observing the motion of a pendulum. This is something that could revolutionise how we think about science, since a machine was able to infer a fundamental law of nature from observations, starting only from data and a set of mathematical operations. (https://www.wired.com/2009/04/newtonai/)
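The work described in the linked article relied, as far as we know, on symbolic regression, which searches through a large space of candidate formulas. The sketch below is a much simpler stand-in, not that algorithm. It simulates a frictionless pendulum; the value g/l = 9.81 (in 1/s^2), the 2 rad amplitude and the time step are assumptions. It then estimates the angular acceleration from the positions alone. Finally, it uses least squares to ask which of two candidate laws, θ'' = cθ or θ'' = c sin θ, explains the data.

```python
import math


def simulate_pendulum(theta0, g_over_l, dt, n_steps):
    """Equally spaced samples of theta(t) for a frictionless pendulum released at rest."""
    theta, omega = theta0, 0.0
    thetas = [theta]
    for _ in range(n_steps):
        # Semi-implicit (symplectic) Euler step for theta'' = -(g/l) sin(theta).
        omega -= g_over_l * math.sin(theta) * dt
        theta += omega * dt
        thetas.append(theta)
    return thetas


def fit_law(thetas, dt, feature):
    """Least-squares fit of theta'' = c * feature(theta).

    The acceleration is estimated from positions only, with a central finite
    difference. Returns the fitted c and the mean squared residual.
    """
    xs, accs = [], []
    for k in range(1, len(thetas) - 1):
        accs.append((thetas[k + 1] - 2 * thetas[k] + thetas[k - 1]) / dt**2)
        xs.append(feature(thetas[k]))
    c = sum(x * a for x, a in zip(xs, accs)) / sum(x * x for x in xs)
    residual = sum((a - c * x) ** 2 for x, a in zip(xs, accs)) / len(xs)
    return c, residual


dt = 0.001
thetas = simulate_pendulum(theta0=2.0, g_over_l=9.81, dt=dt, n_steps=5000)
candidates = [("theta", lambda th: th), ("sin(theta)", math.sin)]
for name, feature in candidates:
    c, residual = fit_law(thetas, dt, feature)
    print(f"theta'' = {c:.3f} * {name}    mean squared residual: {residual:.3g}")
```

The sin θ law should fit with an essentially zero residual and a fitted constant of about -9.81, recovering -g/l. The linear law, which is only the small-angle approximation, leaves a large residual at this amplitude. Because the data here are synthetic and noise-free, the fit is far cleaner than it would be with real measurements.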

It is fair to expect that these attempts will not stop here, and that we will see more and more people trying to use ML both to automate work and to help scientists make fundamental discoveries.